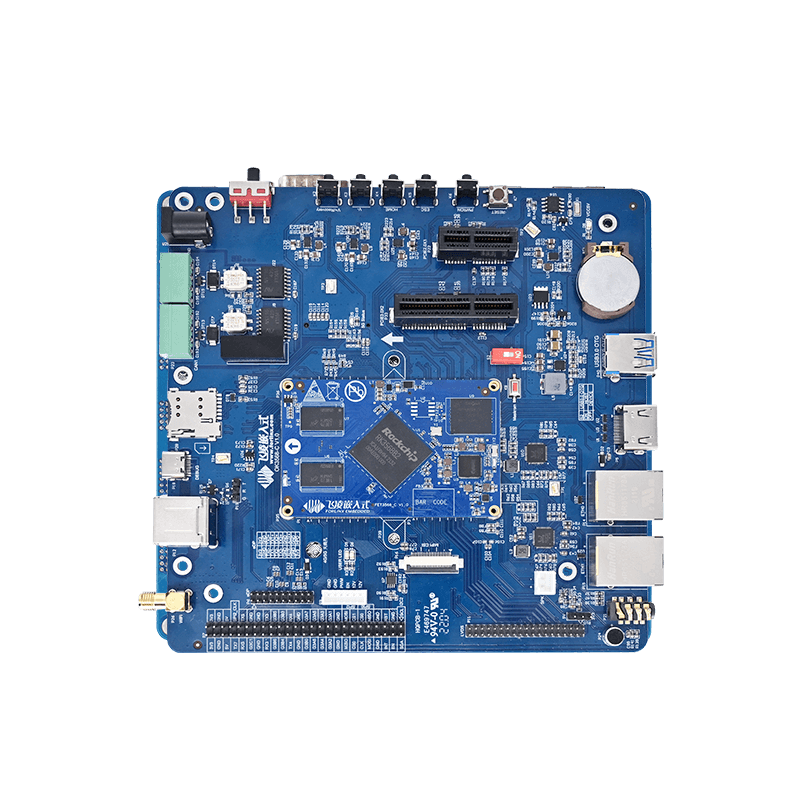

OK3568 Platform Electric Bicycle Recognition Solution: From Model Training to Hardware Deployment

I. Training Your Own YOLOv5 Model

1. Environment Preparation

1.1 Install the Required Software

The following steps are performed in a Windows 11 environment:

Required Software:

Anaconda (Python virtual environment management tool)

Git (to clone the YOLO source code)

PyCharm (Python IDE)

Anaconda Installation: https://blog.csdn.net/Natsuago/article/details/143081283?spm=1001.2014.3001.5501

PyCharm Installation:

Git Installation: https://blog.csdn.net/weixin_45811256/article/details/130925392

1.2 Clone YOLO Repository

Navigate to the directory where you want to store YOLO on your Windows system, open the Command Prompt (cmd), and enter the following command to clone the repository:

git clone https://github.com/ultralytics/yolov5

1.3 Set Up a Virtual Python Environment and Install Dependencies

It is strongly recommended to use Python 3.8, as other versions may cause errors.

conda create -n gityolov5 python=3.8 -y

Install Dependencies: Navigate to the YOLO directory, activate the Python 3.8 virtual environment you just created, and install the required dependencies.

J:\yolov5>conda activate gityolov5

(gityolov5) J:\yolov5>pip install -r requirements.txt -i https://mirrors.huaweicloud.com/repository/pypi/simple

Setup is complete. Now test if it runs successfully:

Open the downloaded yolov5 folder in PyCharm.

Open Settings and add a Python interpreter.

Note: The interpreter is usually located under the directory where Conda is installed, in the envs folder, inside the subfolder named after the virtual environment you created (gityolov5 here):

Open the train.py file and click "Run".

If you see results similar to the example image, the environment has been installed successfully.
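The environment can also be sanity-checked from Python, independently of PyCharm. The following is a minimal sketch: check_environment is a hypothetical helper, and the package list (torch, cv2, yaml) is an assumption about what requirements.txt installs, so adjust it to your checkout.

```python
import importlib.util
import sys


def check_environment(required_modules):
    """Return {module_name: bool} telling whether each top-level module is importable."""
    return {
        name: importlib.util.find_spec(name) is not None
        for name in required_modules
    }


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    # Assumed package list; adjust it to match requirements.txt.
    for name, found in check_environment(["torch", "cv2", "yaml"]).items():
        print(f"{name}: {'OK' if found else 'MISSING'}")
```

Run it with the gityolov5 interpreter selected; any package reported as MISSING suggests the pip install step did not complete.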

2. Dataset Preparation

Due to version compatibility issues, it is recommended to create a new Python 3.9 environment specifically for this step (other versions may have conflicts; Python 3.9 is strongly recommended).

J:\yolov5>conda create -n label python=3.9 -y

J:\yolov5>conda activate label

(label) J:\yolov5>pip install labelimg -i https://mirrors.huaweicloud.com/repository/pypi/simple

(label) J:\yolov5>labelimg

The labeling tool LabelImg is now ready:

The next task is to label the source images and save the annotations.

Create the following directory structure:

datasets -> (project name, "bike" is used here) -> images and labels

Both the images and labels directories contain two folders, train and val. train stores the resources used for training, and val stores the resources used for validation. You can think of train as practice questions and val as exam papers.

images stores the picture files, and labels stores the annotation information. The labels are .txt files containing the class and bounding-box coordinates of each annotated object.
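The directory layout described above can be created in one step. This is a minimal sketch; create_dataset_tree is a hypothetical helper name, and the root folder passed in is up to you.

```python
from pathlib import Path


def create_dataset_tree(root, project):
    """Create images/ and labels/ under datasets/<project>, each with train/ and val/."""
    base = Path(root) / "datasets" / project
    created = []
    for kind in ("images", "labels"):
        for split in ("train", "val"):
            folder = base / kind / split
            # exist_ok=True makes the call safe to repeat.
            folder.mkdir(parents=True, exist_ok=True)
            created.append(folder)
    return created
```

For example, calling create_dataset_tree("J:/yolov5", "bike") would create the four folders under J:/yolov5/datasets/bike.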

The following is the annotation process:

C:\Users\2020>conda activate label

(label) C:\Users\2020>labelimg //Open the annotation tool

For the training set (train): Open the directory containing the training images.

Open the directory for saving the training annotation files.

First, ensure the format is set to YOLO.

Then, click "Create RectBox" to draw a bounding box around the target object. Finally, set the object label.

Press Ctrl + S to save the annotation, then click the next image in the list at the bottom right corner. Repeat this process for all images.

The annotation process for the Val (validation) set is the same.
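Each line that LabelImg writes in YOLO format has the form "class_id x_center y_center width height", with the four box values normalized to the image width and height. The sketch below shows that conversion and a simple validity check; the function names and the sample numbers are illustrative only.

```python
def to_yolo_line(class_id, box, image_size):
    """Convert a pixel box (xmin, ymin, xmax, ymax) into a YOLO label line."""
    xmin, ymin, xmax, ymax = box
    img_w, img_h = image_size
    x_center = (xmin + xmax) / 2 / img_w
    y_center = (ymin + ymax) / 2 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def is_valid_yolo_line(line, num_classes):
    """Check that a label line has 5 fields, a known class id, and values in [0, 1]."""
    parts = line.split()
    if len(parts) != 5:
        return False
    try:
        class_id = int(parts[0])
        values = [float(p) for p in parts[1:]]
    except ValueError:
        return False
    return 0 <= class_id < num_classes and all(0.0 <= v <= 1.0 for v in values)


if __name__ == "__main__":
    # A 320x240 box centered in a 640x480 image, class 0 ("bike").
    print(to_yolo_line(0, (160, 120, 480, 360), (640, 480)))
```

A check like is_valid_yolo_line can be run over every file in labels/train and labels/val before training to catch annotations saved in the wrong format.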

3. Train the Model

Create a configuration file (bike.yaml in this example) in the yolov5/data directory.

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: J:\yolov5\datasets\bike2 # dataset root dir
train: images/train # train images (relative to 'path')
val: images/val # val images (relative to 'path')
test: # test images (optional)
# Classes
nc: 1 # number of classes
names: ['bike'] # class names
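In this configuration, nc must equal the number of entries in names. One way to keep the two in sync is to generate the file from a single list of class names. This is a minimal sketch, with build_dataset_yaml as a hypothetical helper; it uses plain string formatting rather than a YAML library.

```python
def build_dataset_yaml(root, class_names):
    """Return the text of a YOLOv5 dataset config for the given root and classes."""
    # Forward slashes also work on Windows and keep the path portable.
    root = str(root).replace("\\", "/")
    names = ", ".join(f"'{name}'" for name in class_names)
    return (
        f"path: {root}  # dataset root dir\n"
        "train: images/train  # train images (relative to 'path')\n"
        "val: images/val  # val images (relative to 'path')\n"
        f"nc: {len(class_names)}  # number of classes\n"
        f"names: [{names}]  # class names\n"
    )


if __name__ == "__main__":
    print(build_dataset_yaml(r"J:\yolov5\datasets\bike2", ["bike"]))
```

The printed text can be saved as data/bike.yaml. Because nc is derived from the list, adding a second class later cannot leave the count out of date.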

Download YOLOv5 Pretrained Model:

https://github.com/ultralytics/yolov5/releases

Edit Configuration:

In the run configuration for train.py, add the following to the parameters field (marked with a red box in the screenshot):

--weights yolov5s.pt --data data/bike.yaml --workers 1 --batch-size 8

Click "Run" to start training.
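The same training run can be started without PyCharm by passing the parameters on the command line. The sketch below builds that command from Python; build_train_command is a hypothetical helper, and the flag values are the ones used in this article.

```python
import sys


def build_train_command(weights, data, workers, batch_size):
    """Build the argument list for running train.py with the current interpreter."""
    return [
        sys.executable, "train.py",
        "--weights", weights,
        "--data", data,
        "--workers", str(workers),
        "--batch-size", str(batch_size),
    ]


if __name__ == "__main__":
    cmd = build_train_command("yolov5s.pt", "data/bike.yaml", workers=1, batch_size=8)
    print(" ".join(cmd))
    # To actually start training, run this from the yolov5 directory:
    # import subprocess
    # subprocess.run(cmd, check=True)
```

Using sys.executable ensures the command runs with the interpreter of the active Conda environment rather than whichever python is first on PATH.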

Output Location:

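The model is saved under runs/train, at exp3/weights/best.pt in this example. The exp number increases with each training run, so check which folder your run created.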
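Since the exp folder number changes from run to run, the newest best.pt can be located programmatically. This is a minimal sketch that assumes the default runs/train output directory; find_latest_best is a hypothetical helper.

```python
from pathlib import Path


def find_latest_best(runs_dir="runs/train"):
    """Return the most recently modified exp*/weights/best.pt, or None if none exist."""
    candidates = list(Path(runs_dir).glob("exp*/weights/best.pt"))
    if not candidates:
        return None
    # Pick by modification time, since folder names do not sort numerically.
    return max(candidates, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    print(find_latest_best())
```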

Manual Verification:

Place the best.pt file into the yolov5 folder.

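Activate an environment that has the YOLOv5 dependencies installed (rknnyolo in this run) and run detect.py on the training images:

J:\yolov5>conda activate rknnyolo

(rknnyolo) J:\yolov5>python detect.py --weights best.pt --source datasets/bike2/images/train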

detect: weights=['best.pt'], source=datasets/bike2/images/train, data=data\coco128.yaml, imgsz=[640, 640], conf_thres=0.25, iou_thres=0.45, max_det=1000, device=, view_img=False, save_txt=False, save_format=0, save_csv=False, save_conf=False, save_crop=False, nosave=False, classes=None, agnostic_nms=False, augment=False, visualize=False, update=False, project=runs\detect, name=exp, exist_ok=False, line_thickness=3, hide_labels=False, hide_conf=False, half=False, dnn=False, vid_stride=1
YOLOv5 v7.0-418-ga493afe1 Python-3.8.20 torch-2.4.1+cpu CPU
Fusing layers...
Model summary: 157 layers, 7012822 parameters, 0 gradients, 15.8 GFLOPs
image 1/97 J:\yolov5\datasets\bike2\images\train\001.jpg: 384x640 1 bike, 59.2ms
image 2/97 J:\yolov5\datasets\bike2\images\train\002.jpg: 384x640 1 bike, 51.2ms
image 3/97 J:\yolov5\datasets\bike2\images\train\003.jpg: 384x640 1 bike, 52.3ms
image 4/97 J:\yolov5\datasets\bike2\images\train\004.jpg: 384x640 1 bike, 56.6ms
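The per-image lines of this log can be summarized to see how many images were processed and the average inference time. This is a minimal sketch that assumes the line format shown above; the regular expression and the summarize_detect_log name are illustrative, not part of YOLOv5.

```python
import re

# Matches lines such as:
# image 1/97 J:\...\001.jpg: 384x640 1 bike, 59.2ms
LINE_RE = re.compile(
    r"image \d+/\d+ (?P<path>.+?): \d+x\d+ (?P<detections>.+), (?P<ms>[\d.]+)ms"
)


def summarize_detect_log(log_text):
    """Return (number_of_images, mean_inference_ms) parsed from detect.py output."""
    times = [float(m.group("ms")) for m in LINE_RE.finditer(log_text)]
    if not times:
        return 0, None
    return len(times), sum(times) / len(times)
```

Applied to the four lines shown above, the function reports 4 images with a mean of roughly 54.8 ms per image on this CPU.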

Test Results:

II. Deploy and Convert Model to RKNN

Modify the file:

Modify the file:

After making these modifications, use the following commands to install onnx and convert .pt to .onnx:

(gityolov5) J:\yolov5>pip install onnx==1.13 -i https://mirrors.huaweicloud.com/repository/pypi/simple

(gityolov5) J:\yolov5>python export.py --weights best.pt --img 640 --batch 1 --include onnx

Make the necessary modifications in the configuration file.

On Ubuntu 22.04:

Install Anaconda:

forlinx@ok3568:~$ sudo apt install libgl1-mesa-glx libegl1-mesa libxrandr2 libxss1 libxcursor1 libxcomposite1 libasound2 libxi6 libxtst6

forlinx@ok3568:~$ sudo apt update

forlinx@ok3568:~$ wget https://repo.anaconda.com/archive/Anaconda3-2024.10-1-Linux-x86_64.sh

forlinx@ok3568:~$ bash Anaconda3-2024.10-1-Linux-x86_64.sh

Welcome to Anaconda3 2024.10-1
In order to continue the installation process, please review the license agreement.
Please, press ENTER to continue
>>> //Press Enter. The license text is printed over many pages; press 'q' to exit the viewer.
Do you accept the license terms? [yes|no]
>>> yes //Enter yes to accept the terms after exiting the viewer
Anaconda3 will now be installed into this location:
/home/forlinx/anaconda3 //Press Enter again to confirm

forlinx@ok3568:~/anaconda3/bin$ export PATH=~/anaconda3/bin:$PATH

forlinx@ok3568:~/anaconda3/bin$ source ~/anaconda3/bin/activate

(base) forlinx@ok3568:~/anaconda3/bin$ source ~/.bashrc

(base) forlinx@ubuntu22:~/anaconda3/bin$ conda create -n rknn python=3.8 //Create a Python 3.8 virtual environment

(base) forlinx@ubuntu22:~/anaconda3/bin$ conda activate rknn

Download the RKNN toolkit repository and install the toolkit package:

(rknn) forlinx@ok3568:~$ git clone https://github.com/airockchip/rknn-toolkit2.git --depth 1

(rknn) forlinx@ubuntu22:~/rknn-toolkit2/rknn-toolkit2/packages/x86_64$ pip install rknn_toolkit2-2.3.2-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl -i https://mirrors.huaweicloud.com/repository/pypi/simple

Conversion:

Modify the test.py file:

(rknn) forlinx@ubuntu22:~/rknn-toolkit2/rknn-toolkit2/examples/onnx/yolov5$ vi test.py

import os
import urllib
import traceback
import time
import sys
import numpy as np
import cv2
from rknn.api import RKNN

# Model from https://github.com/airockchip/rknn_model_zoo
ONNX_MODEL = 'best.onnx'   # ONNX model to convert
RKNN_MODEL = 'best.rknn'   # RKNN model to generate
IMG_PATH = './test.jpg'    # Test image path
DATASET = './dataset.txt'  # Image list used for quantization

QUANTIZE_ON = True

OBJ_THRESH = 0.25
NMS_THRESH = 0.45
IMG_SIZE = 640

CLASSES = ("bike",)  # Label; the trailing comma keeps this a one-element tuple

if __name__ == '__main__':

    # Create RKNN object
    rknn = RKNN(verbose=True)

    # pre-process config
    print('--> Config model')
    rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]], target_platform='rk3568')
    print('done')

    # Load ONNX model
    print('--> Loading model')
    ret = rknn.load_onnx(model=ONNX_MODEL)
    if ret != 0:
        print('Load model failed!')
        exit(ret)
    print('done')

    # Build model
    print('--> Building model')
    ret = rknn.build(do_quantization=QUANTIZE_ON, dataset=DATASET)
    if ret != 0:
        print('Build model failed!')
        exit(ret)
    print('done')

    # Export RKNN model
    print('--> Export rknn model')
    ret = rknn.export_rknn(RKNN_MODEL)
    if ret != 0:
        print('Export rknn model failed!')
        exit(ret)
    print('done')
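Because QUANTIZE_ON is True, rknn.build reads the file named by DATASET, a text file listing the images used for quantization, one path per line. The sketch below generates such a file from a folder of images. write_dataset_txt is a hypothetical helper, and using a subset of your training images for this purpose is an assumption, not something the toolkit requires.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def write_dataset_txt(image_dir, output_file="dataset.txt", limit=None):
    """Write one image path per line to output_file; return how many were written."""
    images = sorted(
        p for p in Path(image_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    if limit is not None:
        images = images[:limit]
    Path(output_file).write_text("".join(f"{p.as_posix()}\n" for p in images))
    return len(images)
```

For example, write_dataset_txt("calib_images", "dataset.txt", limit=20) would list up to 20 images from a (hypothetical) calib_images folder next to test.py.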

(rknn) forlinx@ubuntu22:~/rknn-toolkit2/rknn-toolkit2/examples/onnx/yolov5$ python test.py

I rknn-toolkit2 version: 2.3.2
--> Config model
done
--> Loading model
I Loading : 100%|██████████████████████████████████████████████| 120/120 [00:00<00:00, 19261.28it/s]
done
--> Building model
D base_optimize ...
D base_optimize done.
D fold_constant ...
D fold_constant done.
D correct_ops ...
D correct_ops done.

When the conversion completes, best.rknn is generated in the current directory.

III. Deployment of the Development Board Runtime Environment:

forlinx@ok3568:~$ sudo apt update

forlinx@ok3568:~$ sudo apt install git cmake

forlinx@ok3568:~$ git clone https://bgithub.xyz/rockchip-linux/rknpu2.git

Edit the label list file so that it contains only the bike label:

forlinx@ok3568:~/rknpu2/examples/rknn_yolov5_demo$ vi model/coco_80_labels_list.txt

Edit the following header file and set the number of detection classes to 1:

forlinx@ok3568:~/rknpu2/examples/rknn_yolov5_demo$ vi include/postprocess.h
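The number of labels in the list file and the class count in postprocess.h should agree with the single class the model was trained on. A small check before building can catch a mismatch. This is a sketch under an assumption: it expects the class count to be defined by a macro named OBJ_CLASS_NUM, so verify the macro name in your checkout of the demo. Both helper names are hypothetical.

```python
import re


def count_labels(labels_text):
    """Count non-empty lines in the label list file."""
    return sum(1 for line in labels_text.splitlines() if line.strip())


def read_class_macro(header_text, macro="OBJ_CLASS_NUM"):
    """Return the integer value of '#define <macro> N', or None if it is absent."""
    # The macro name is an assumption; check it against your postprocess.h.
    pattern = rf"^\s*#define\s+{re.escape(macro)}\s+(\d+)"
    match = re.search(pattern, header_text, re.MULTILINE)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    labels = open("model/coco_80_labels_list.txt", encoding="utf-8").read()
    header = open("include/postprocess.h", encoding="utf-8").read()
    print("labels:", count_labels(labels), "macro:", read_class_macro(header))
```

Run it from the rknn_yolov5_demo directory; for this project both numbers should be 1.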

forlinx@ok3568:~/rknpu2/examples/rknn_yolov5_demo$ bash build-linux_RK3566_RK3568.sh

At this point, the environment setup is complete.

Copy the images for inference and the RKNN model to the development board, then execute the following command. An out.jpg file will be generated in the current directory.

forlinx@ok3568:~/rknpu2/examples/rknn_yolov5_demo/install/rknn_yolov5_demo_Linux$ ./rknn_yolov5_demo best.rknn train/006.jpg