LLM + VLM Multimodal Visual Assistant Based on RK3576 SoM

In areas like smart power, intelligent transportation, and industrial inspection, embedded devices act as "perception terminals" that collect image information and perform intelligent analysis. Accurate image understanding is essential to making these scenarios more intelligent and to keeping production and daily life safe and efficient. In practice, this means identifying standardized operations and potential safety hazards in power inspection, interpreting traffic signs and monitoring road conditions in transportation, and categorizing objects and identifying defects in industrial environments.

Traditional embedded solutions are limited by their model architectures and by computing power bottlenecks, which leads to inadequate recognition accuracy, slow response times, and high adaptation costs. As a result, they struggle to meet requirements for precision, efficiency, and generality. In this context, Forlinx Embedded has developed a multimodal large model image understanding assistant based on the RK3576 SoM. It combines large language models (LLMs) and visual language models (VLMs) to create an "intelligent visual center" for embedded devices, allowing terminal devices to genuinely "understand" the complexities of the world around them.

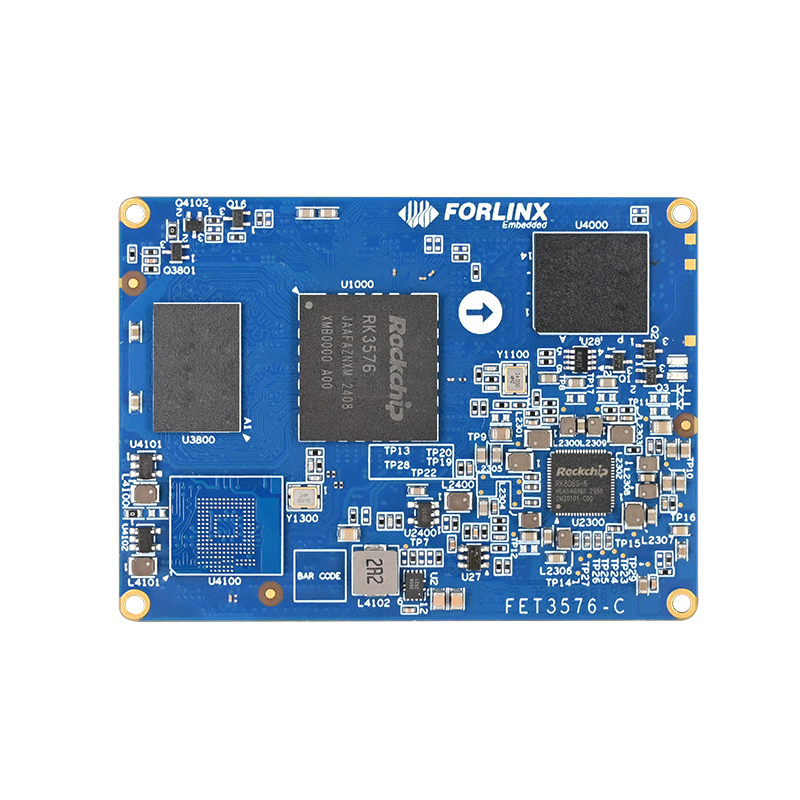

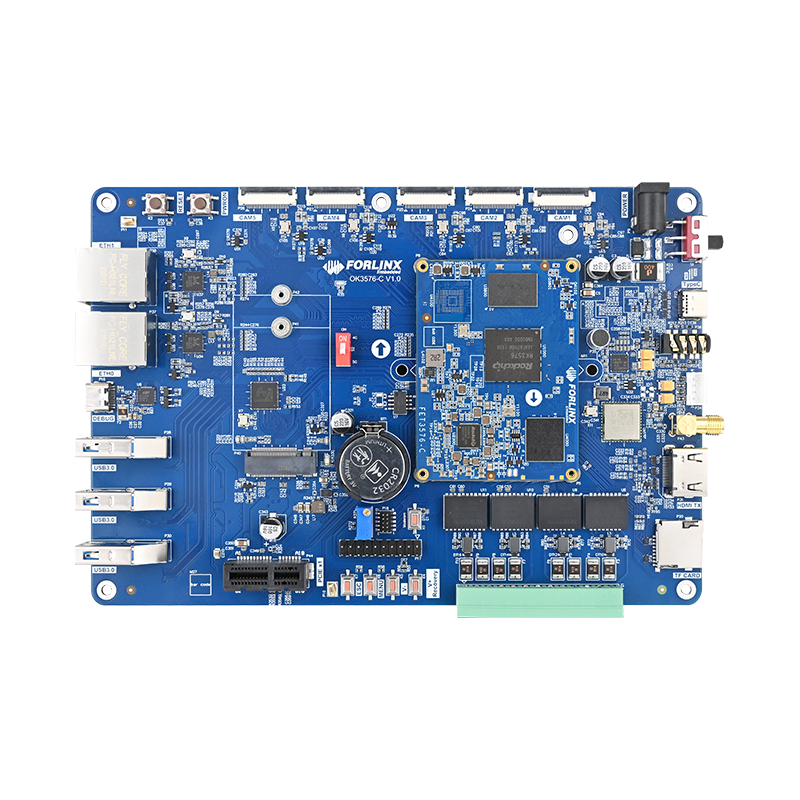

FET3576-C SoM Multimodal Large Model Image Understanding Assistant

1. FET3576-C SoM Advantages

Forlinx Embedded's FET3576-C System on Module (SoM) is built on the Rockchip RK3576, a high-performance, low-power application processor that Rockchip designed for the AIoT and industrial markets. It combines four ARM Cortex-A72 cores and four ARM Cortex-A53 cores with a built-in Neural Processing Unit (NPU) that delivers 6 TOPS of computing power. This allows the SoM to run large language models and multimodal models of various parameter scales efficiently in AI applications.
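As a rough guide to what "various parameter scales" means on an embedded target, the memory needed for model weights alone is approximately the parameter count multiplied by the bits per weight, divided by eight. The sketch below works through that arithmetic. The parameter counts and bit widths are illustrative assumptions, not figures published for the FET3576-C, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in GiB.

    bytes = parameters * bits_per_weight / 8
    """
    if num_params < 0 or bits_per_weight <= 0:
        raise ValueError("num_params must be >= 0 and bits_per_weight > 0")
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


if __name__ == "__main__":
    # Illustrative model sizes and quantization widths (assumptions).
    for params in (0.5e9, 2e9, 7e9):
        for bits in (16, 8, 4):
            gib = weight_memory_gib(params, bits)
            print(f"{params / 1e9:.1f}B parameters at {bits}-bit: {gib:.2f} GiB")
```

The same model shrinks by a factor of four when its weights go from 16-bit to 4-bit, which is why quantization usually decides whether a given parameter scale fits in an embedded module's memory.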

2. Multimodal Large-Model Architecture

Forlinx Embedded’s multimodal large model seamlessly integrates a large language model (LLM) based on the Transformer architecture with a large visual language model (VLM). This combination forms a coherent multimodal system architecture that promotes efficient collaboration. The LLM and the visual model operate together within a unified framework, enabling a comprehensive understanding and effective response to complex tasks.

Core Architecture of the Multimodal Large Model

01 Visual Encoder: The Image "Translator"

Having a visual encoder is akin to giving an embedded terminal "eyes." It converts the original image into digital signals that machines can understand.

Consider the example of a photo featuring a power worker climbing a utility pole. The visual encoder will first extract key information from the image, such as the shape of the utility pole, the worker's movements, and the background scenery. It then translates this visual content into a "universal language" that the embedded device can understand, which lays the groundwork for further analysis. Compared to traditional CNN models, Transformer-based visual encoders better capture long-distance dependencies, significantly enhancing target recognition accuracy in complex scenarios.
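To make the "long-distance dependencies" point concrete, here is a minimal sketch of the two ideas behind a Transformer-style visual encoder: cutting the image into patch tokens, then letting every patch attend to every other patch. It assumes a ViT-style design, which the article does not confirm for this product, and it leaves out the learned query/key/value projections that a real encoder would apply. The function names are hypothetical.

```python
import math
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values
Token = List[float]


def patchify(image: Image, patch: int) -> List[Token]:
    """Split an image into non-overlapping patch x patch tiles, each flattened."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([image[top + dy][left + dx]
                           for dy in range(patch) for dx in range(patch)])
    return tokens


def attention_weights(tokens: List[Token]) -> List[List[float]]:
    """Softmax of scaled dot products between all pairs of patch tokens.

    Simplification: tokens are used directly as queries and keys.
    """
    dim = len(tokens[0])
    weights = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        peak = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


if __name__ == "__main__":
    image = [[(r * 4 + c) / 16 for c in range(4)] for r in range(4)]
    tokens = patchify(image, patch=2)
    for row in attention_weights(tokens):
        print([round(w, 3) for w in row])
```

Every row of the weight matrix has a nonzero entry for every patch, including the one in the opposite corner of the image. A convolution layer, by contrast, only mixes pixels that fall inside its kernel window.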

02 Projector: The Information "Converter"

As a "bridge" between vision and language, the projector further converts the image signals processed by the visual encoder into a format that the large language model can understand.

It is like a "converter" that can repackage and adjust the digital signals of the image, enabling the large language model to "understand" what the image is saying, thus paving the way for subsequent language understanding work. The multimodal large model enables seamless connection between visual and language information by constructing a unified representation space, avoiding the problem of information fragmentation in traditional systems.
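One common way to build such a projector is a single learned linear layer that maps each visual token from the encoder's feature size to the language model's embedding size. The sketch below shows that mapping with hand-picked weights. Whether Forlinx's model uses a linear layer, an MLP, or another design is not stated in the article, so treat the structure, the dimensions, and the name `project` as assumptions.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def project(visual_tokens: List[Vector], weight: Matrix, bias: Vector) -> List[Vector]:
    """Apply y = W x + b to every visual token.

    weight has shape (llm_dim, vision_dim); bias has length llm_dim.
    """
    vision_dim = len(weight[0])
    if len(bias) != len(weight):
        raise ValueError("bias length must equal the number of weight rows")
    projected = []
    for token in visual_tokens:
        if len(token) != vision_dim:
            raise ValueError("token size does not match the weight matrix")
        projected.append([sum(w * x for w, x in zip(row, token)) + b
                          for row, b in zip(weight, bias)])
    return projected


if __name__ == "__main__":
    # Toy sizes: vision_dim = 2, llm_dim = 3. Real models use hundreds or
    # thousands of dimensions, and the weights are learned during training.
    weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    bias = [0.0, 0.0, 0.5]
    print(project([[2.0, 3.0]], weight, bias))
```

After projection, an image token has the same length as a text token embedding, which is what lets both share the unified representation space described above.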

03 Large Language Model: The Content "Creator"

After receiving the "image understanding package" from the projector, the large language model, which can be regarded as a "textual brain", starts to work. According to user instructions, such as "generate a description of the image", it converts the image information into natural language that humans can understand. It analyzes the logic of the picture and organizes descriptions like "This image shows a power worker maintaining or inspecting a tall utility pole…", completing the conversion from image to text and endowing the embedded device with the ability to output natural language.

To sum up, the collaboration process of the above three modules is:

The visual encoder "views" the image, the projector "transforms" the signals, and the large language model "describes" the content. This enables embedded devices to evolve from simply "seeing the picture" to "clearly explaining its meaning", achieving multimodal image understanding.
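The three-stage flow can be sketched as a simple function composition. Everything here is hypothetical scaffolding: the callables stand in for the real encoder, projector, and language model, and the `<image>` placeholder token is a convention borrowed from open VLMs rather than something the article specifies.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def build_llm_input(prompt_tokens: Sequence[str],
                    image_embeddings: List[Vector],
                    embed_text: Callable[[str], Vector],
                    placeholder: str = "<image>") -> List[Vector]:
    """Replace the placeholder token with the projected image embeddings."""
    sequence: List[Vector] = []
    for token in prompt_tokens:
        if token == placeholder:
            sequence.extend(image_embeddings)  # image occupies many positions
        else:
            sequence.append(embed_text(token))
    return sequence


def describe_image(image,
                   prompt_tokens: Sequence[str],
                   encoder: Callable,
                   projector: Callable,
                   embed_text: Callable[[str], Vector],
                   llm: Callable[[List[Vector]], str]) -> str:
    visual_tokens = encoder(image)                 # 1. "view" the image
    image_embeddings = projector(visual_tokens)    # 2. "transform" the signals
    llm_input = build_llm_input(prompt_tokens, image_embeddings, embed_text)
    return llm(llm_input)                          # 3. "describe" the content


if __name__ == "__main__":
    # Toy stand-ins so the data flow can be run end to end.
    result = describe_image(
        image=[[1, 2], [3, 4]],
        prompt_tokens=["describe", "<image>", "briefly"],
        encoder=lambda img: [[float(sum(row))] for row in img],
        projector=lambda toks: [[t[0], 0.0] for t in toks],
        embed_text=lambda tok: [float(len(tok)), 1.0],
        llm=lambda seq: f"received {len(seq)} embeddings",
    )
    print(result)
```

Keeping the stages behind plain callables means each one can be swapped or accelerated independently, for example by running the encoder on the NPU while the rest of the pipeline stays unchanged.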

3. Effect Demonstration: Precise, Efficient, and Intelligent

As a solution for the combined processing of images and text, Forlinx Embedded's multimodal large model offers stronger capabilities than traditional image algorithms in image description generation, visual question answering, and visual reasoning:

01 Powerful image semantic understanding and generation capabilities

Forlinx Embedded's multimodal large model can integrate image content with natural-language instructions. It understands objects and their relationships in context and provides coherent, hierarchical descriptions. This goes beyond traditional algorithms, which can only identify objects: most traditional image models perform a single pre-defined task and struggle with in-depth semantic understanding and context association.

02 Precise interactive understanding and visual question-answering capabilities

The multimodal large model can accurately understand the user's image-related questions. Building on that understanding, it gives correct answers and adjusts its answering strategy dynamically to handle a diverse range of questions. This addresses the shortcomings of traditional algorithms, which require pre-designed solutions for specific visual questions, adapt poorly to new questions, and answer complex questions with insufficient accuracy.

03 Higher-level abstract thinking and visual reasoning capabilities

Forlinx Embedded's multimodal large model can analyze relationships such as the position and causality of objects in the image and complete complex reasoning. As shown in the figure below, it can analyze and predict potential dangers and safety hazards in the scene. In contrast, traditional algorithms mainly focus on the recognition and classification of specific elements in the image and have difficulty performing tasks involving the analysis of complex relationships between multiple objects.

In addition, Forlinx Embedded's multimodal large model understands both Chinese and English well, which suits cross-language communication, international cooperation, and multi-language user groups.

In summary, when dealing with complex visual tasks, Forlinx Embedded's multimodal large model shows significant advantages in semantic understanding, interaction flexibility, and advanced reasoning capabilities, far exceeding traditional computer-vision methods. These capabilities make it more intelligent and efficient in understanding and processing data containing various information forms.

4. Conclusion

Forlinx Embedded's multimodal large-model image understanding assistant integrates the two major fields of language and vision, generating text descriptions directly from images during picture analysis. Its generality, accuracy, and scalability give it broad prospects in practical applications. As the technology matures and application scenarios expand, multimodal large models are expected to play an important role in more fields.