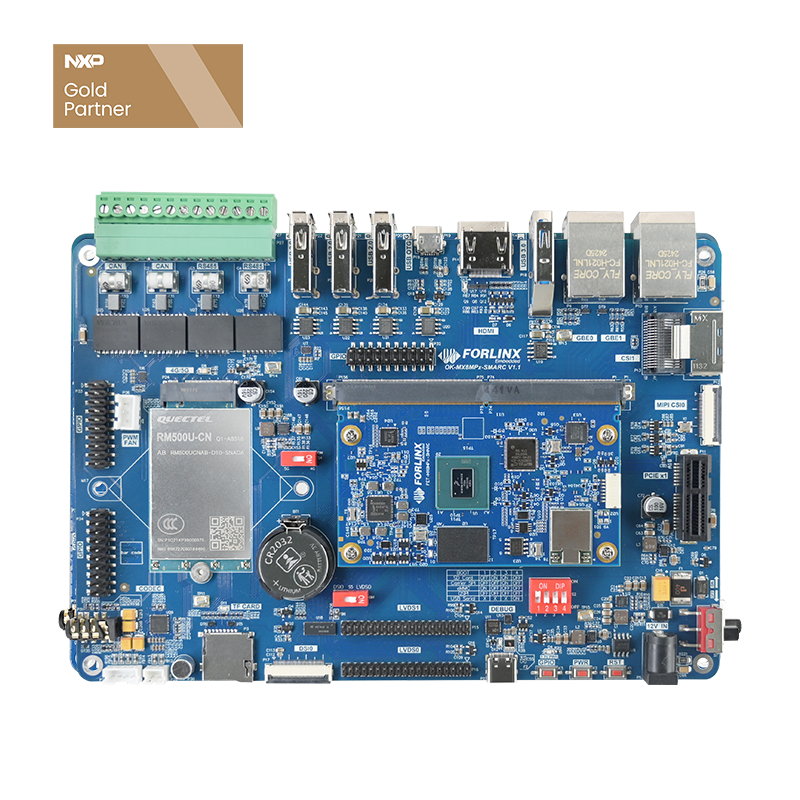

Porting of MobileNetV3 Model and Implementation of Handwritten Digit Recognition Based on OKMX8MP-C (Linux 5.4.70)

This article details how to port and run the MobileNetV3 model on the Forlinx OKMX8MP-C embedded platform to recognize handwritten digits. It walks through the full eIQ Portal workflow: dataset import, model training and validation, TensorFlow Lite quantization, and deployment. Through this case, readers can quickly learn how to run edge inference with a lightweight deep-learning model on an industrial-grade ARM platform.

1. Importing the Dataset

Before training the model, prepare a dataset. If you do not have one, click "Import dataset" and select one of the datasets provided with the tool. (If you have your own dataset, click "Create a blank project" and import it directly.) See the figure below.

This article uses a dataset provided by the tool, which loads it from TensorFlow, as shown below.

You can select one of the provided datasets from the drop-down menu in the upper-left corner. These are all datasets commonly used with TensorFlow. This article uses the mnist dataset.

The mnist dataset has 60,000 handwritten digits as the training set and 10,000 handwritten digits as the validation set. In addition, there are three other datasets:

- cifar10: It contains color images of 10 classes, with 50,000 images as the training set and 10,000 images as the validation set.

- horses_or_humans: It has 2 classes, humans and horses, with 1,027 images in total in the training set.

- tf_flowers: It has 5 classes, with a total of 3,670 images of all kinds of flowers.

There is also a "Problem type" drop-down menu in the upper-left corner, which selects the type of task. As shown below, this version of the tool provides only two types: image classification and object detection. The object detection task offers a single dataset, coco/2017, which covers 80 object categories, with 118,287 images as the training set, 5,000 images as the validation set, and 20,288 images as the test set.

After selecting the dataset, click the "IMPORT" button, select the save directory, and wait for the import to finish, as shown in the figures below:

Select the save directory.

Wait for the dataset to be imported.

After the import is completed, you can view the mnist dataset. The left side shows the number of each image and the image labels. The right side shows each image in the dataset. You can view the detailed information by selecting an image.

2. Model Training

After importing the dataset, the next step is to select a model. As shown below, click the "SELECT MODEL" button.

The interface for selecting a model is as shown below. In this interface, three different options are shown on the left, and their functions are as follows:

- RESTORE MODEL: Load the model used last time.

- BASE MODELS: Select the provided base models.

- USER MODELS: Select the models you created.

On the right, models with different functions are shown, such as classification models, image segmentation models, and object detection models.

This article uses a base model provided by eIQ Portal, so select "BASE MODELS" as shown in the figure below.

The figure below shows several base models provided by the tool. This article uses the mobilenet_v3 model. The structures of the different models can be viewed through "MODEL TOOL" on the initial page.

After selecting the model, you enter the model training stage, whose interface is shown below. The left side shows the parameters that can be adjusted for training, including the learning rate, batch size, and number of epochs. The right side shows information such as the accuracy and loss value during training.

The parameters for this training are as shown below. After setting them, click "Start training".

The training process is shown below. The model's accuracy and loss value can be read directly from the right-hand panel.

After the model training is completed, as shown in the figure below, you can set different step ranges to inspect the training information for those steps.
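The number of training steps follows from the dataset size, the batch size, and the number of epochs. The sketch below shows that arithmetic. The batch size of 32 and the 10 epochs are hypothetical values, not the settings used in this article, and counting a final partial batch as a full step is an assumption about how the tool reports steps.

```python
import math


def training_steps(num_samples, batch_size, epochs):
    """Return (steps per epoch, total steps) for one training run.

    Assumption: a final, partially filled batch still counts as one step.
    """
    steps_per_epoch = math.ceil(num_samples / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


# 60,000 is the mnist training-set size; batch size and epochs are hypothetical.
per_epoch, total = training_steps(num_samples=60_000, batch_size=32, epochs=10)
print(f"{per_epoch} steps per epoch, {total} steps in total")
```

A larger batch size reduces the number of steps per epoch, which is worth keeping in mind when choosing a step range to inspect.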

3. Model Validation

After the model training is completed, the model needs to be validated. Select "VALIDATE" to enter the model validation stage, as shown in the figure below.

In the model validation interface, you also need to set the validation parameters, including the Softmax Threshold and some quantization parameters.
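One common way to read a softmax threshold is that a prediction only counts when the top class probability reaches the threshold. The sketch below illustrates that reading with NumPy. Whether eIQ Portal applies exactly this rule is an assumption, and the logits and the 0.5 threshold are made-up values.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)


def predict_with_threshold(logits, threshold):
    """Return the top class per row, or -1 when its probability is below threshold."""
    probs = softmax(np.asarray(logits, dtype=np.float64))
    top = np.argmax(probs, axis=-1)
    confident = np.max(probs, axis=-1) >= threshold
    return np.where(confident, top, -1)


# Hypothetical logits: the first row is confident, the second is nearly uniform.
logits = np.array([[4.0, 0.0, 0.0],
                   [1.0, 1.1, 0.9]])
result = predict_with_threshold(logits, threshold=0.5)
print(result)
```

Raising the threshold trades coverage for confidence: fewer samples receive a prediction, but the ones that do are more certain.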

The parameters set in this article are as follows. After setting them, click "Validate" as shown below.

After the validation is completed, the confusion matrix and accuracy of the model will be displayed in the interface, as shown below.
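To make the two reported quantities concrete, here is a minimal sketch of how a confusion matrix and the accuracy can be computed from true and predicted labels. The label lists are hypothetical, and the orientation (rows as true labels, columns as predicted labels) is an assumption that may differ from the layout the tool displays.

```python
import numpy as np


def confusion_matrix(true_labels, predicted_labels, num_classes=10):
    """Build a matrix whose rows are true labels and columns are predicted labels."""
    matrix = np.zeros((num_classes, num_classes), dtype=np.int64)
    for true, predicted in zip(true_labels, predicted_labels):
        matrix[true, predicted] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal, i.e. predicted correctly."""
    return np.trace(matrix) / matrix.sum()


# Hypothetical validation results: one digit 9 is mistaken for a 7.
true_labels = [0, 1, 2, 7, 9, 9]
predicted_labels = [0, 1, 2, 7, 9, 7]
cm = confusion_matrix(true_labels, predicted_labels)
print(f"9 predicted as 7: {cm[9, 7]} time(s), accuracy: {accuracy(cm):.3f}")
```

Off-diagonal entries show which digits are confused with each other, which the single accuracy figure cannot reveal.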

4. Model Conversion

After training and validation are completed, the model must be converted into the .tflite format so that it can run on the OKMX8MP. Click "DEPLOY" to enter the conversion interface, as shown in the figure below.

Select the export type from the drop-down menu on the left. In this article, the export format is TensorFlow Lite. To keep the model lightweight, the input and output data types are both set to int8. The parameters are set as shown below.

After setting the parameters, select "EXPORT MODEL" to export the model in the .tflite format, and then copy the exported model to the OKMX8MP.
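With int8 input and output, real values are mapped to 8-bit integers through a scale and a zero point. The sketch below shows the standard affine mapping used by TensorFlow Lite, q = round(x / scale) + zero_point, and its inverse. The scale and zero point here are hypothetical; the real values are stored in the exported model and can be read from the interpreter's input and output details.

```python
import numpy as np


def quantize_int8(values, scale, zero_point):
    """Map float values to int8 with q = round(x / scale) + zero_point."""
    q = np.round(np.asarray(values, dtype=np.float32) / scale) + zero_point
    # Clip before casting so out-of-range values saturate instead of wrapping.
    return np.clip(q, -128, 127).astype(np.int8)


def dequantize_int8(q, scale, zero_point):
    """Recover approximate float values with x = (q - zero_point) * scale."""
    return (q.astype(np.float32) - zero_point) * scale


# Hypothetical parameters that map the range [0, 1] onto [-128, 127].
scale, zero_point = 1.0 / 255.0, -128
pixels = np.array([0.0, 0.25, 1.0], dtype=np.float32)
q = quantize_int8(pixels, scale, zero_point)
restored = dequantize_int8(q, scale, zero_point)
print(q, restored)
```

The round trip is not exact: each value can be off by up to one quantization step, which is the price paid for the smaller model.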

5. Model Prediction

Before the model prediction, the following files need to be prepared.

- The mobilenet_v3.tflite model file.

- The handwritten digit image files to be predicted.

- The Python script file for loading the model and pre-processing the images.

Among them, the .tflite file is the one exported after model validation. For the images, you can select several handwritten digit images from the dataset, or write digits by hand and convert them into 28x28 images with white digits on a black background. This article uses the following 30 images for prediction, named in the form "group number_label", as shown in the figure below.
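If you photograph or scan your own digits, they are usually dark strokes on light paper, the opposite of the white-on-black style described above. The following is a minimal sketch of one way to detect and fix that on a grayscale array. The function name `to_white_on_black` and the mean-brightness heuristic are assumptions made for illustration, not part of the original workflow.

```python
import numpy as np


def to_white_on_black(gray):
    """Invert an 8-bit grayscale image if its background is bright.

    Assumption: the background covers most of the image, so a mean above
    mid-gray (127.5) indicates dark strokes on a light background.
    """
    gray = np.asarray(gray, dtype=np.uint8)
    if gray.mean() > 127.5:
        return 255 - gray
    return gray


# Hypothetical 4x4 "scan": white paper (255) with a short dark stroke (0).
scan = np.full((4, 4), 255, dtype=np.uint8)
scan[1:3, 2] = 0
converted = to_white_on_black(scan)
print(converted)
```

An image that is already white on black passes through unchanged, so the function can be applied to every input without checking first.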

Write a Python script and save it as demo.py:

    import numpy as np
    from PIL import Image
    import tflite_runtime.interpreter as tflite

    # ---------------- Configuration ----------------
    MODEL_PATH = "/home/root/mobilenet_v3.tflite"

    # 30 test images named "<group>_<label>.jpg": 1_0.jpg ... 3_9.jpg
    IMAGE_PATHS = [
        f"/home/root/{group}_{label}.jpg"
        for group in range(1, 4)
        for label in range(10)
    ]

    # ---------------- Load the model ----------------
    interpreter = tflite.Interpreter(model_path=MODEL_PATH)
    interpreter.allocate_tensors()

    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()

    # Model input information
    input_shape = input_details[0]['shape']  # [1, H, W, C]
    height, width, channels = input_shape[1], input_shape[2], input_shape[3]
    input_dtype = input_details[0]['dtype']  # np.float32 or np.int8

    # Quantization parameters (only meaningful for an int8 input)
    scale, zero_point = input_details[0]['quantization']

    # ---------------- Prediction ----------------
    for img_path in IMAGE_PATHS:
        # Open the image and convert it to RGB (3 channels)
        img = Image.open(img_path).convert('RGB')
        img = img.resize((width, height))

        # Convert to a NumPy array
        img_array = np.array(img, dtype=np.float32)

        # If your images are black digits on a white background, invert them
        # img_array = 255 - img_array

        # Normalize to 0 ~ 1
        img_array = img_array / 255.0

        # Reshape to [1, H, W, 3]
        img_array = img_array.reshape(1, height, width, channels)

        # If the model input is quantized int8, quantize the image as well
        if input_dtype == np.int8:
            img_array = img_array / scale + zero_point
            # Clip before casting so out-of-range values cannot wrap around
            img_array = np.clip(np.round(img_array), -128, 127).astype(np.int8)

        # Set the input
        interpreter.set_tensor(input_details[0]['index'], img_array)

        # Run inference
        interpreter.invoke()

        # Get the output and take the class with the highest score
        output_data = interpreter.get_tensor(output_details[0]['index'])
        predicted_label = np.argmax(output_data)

        print(f"picture{img_path} prediction {predicted_label}")

Copy the model file, the image files, and the script to the OKMX8MP, as shown in the following figure.

Enter the following command to make predictions:

python3 demo.py

The output result is as follows:

The output shows that 3_9.jpg, whose label is 9, is predicted as 7, while all other images are predicted correctly. The 3_9.jpg image is as follows:

These results show that the trained model is accurate, classifying 29 of the 30 test images correctly (about 96.7%), and that it still performs well after being ported to the OKMX8MP.
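Because the test images are named in the form "group number_label", the accuracy can be checked automatically instead of by eye. The sketch below is one way to do that. The helper names are hypothetical, and the `predictions` dictionary simply restates the result described above (every image correct except 3_9.jpg, which is predicted as 7) rather than reading real output from the board.

```python
from pathlib import Path


def expected_label(image_path):
    """Extract the label from a file named '<group>_<label>.<ext>'."""
    return int(Path(image_path).stem.split("_")[1])


def accuracy_from_predictions(predictions):
    """Return (accuracy, misclassified paths) for a path -> predicted label map."""
    wrong = [path for path, predicted in predictions.items()
             if expected_label(path) != predicted]
    return 1 - len(wrong) / len(predictions), wrong


# Restates the reported result: all correct except 3_9.jpg, predicted as 7.
predictions = {f"/home/root/{group}_{label}.jpg": label
               for group in range(1, 4) for label in range(10)}
predictions["/home/root/3_9.jpg"] = 7

acc, wrong = accuracy_from_predictions(predictions)
print(f"accuracy {acc:.3f}, misclassified: {wrong}")
```

In practice, the prediction loop in demo.py could collect its results into such a dictionary and call the same helper at the end.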